At some point in your (very) recent past — perhaps just a few seconds ago — electrical signals traveled between your brain cells and you made a decision: to start reading this article. This decision is just one of thousands that you make each day. And scientists are hard at work deciphering the mechanisms in the brain that guide those decisions — whether you are choosing what to read, whom to marry or what to eat.

While there has been significant progress in the science of decision making, less is known about how the brain gathers the evidence it needs — and ignores what it doesn’t — to arrive at a decision. Jacqueline Gottlieb, PhD, a neuroscientist at Columbia’s Mortimer B. Zuckerman Mind Brain Behavior Institute and associate professor of neuroscience at Columbia University Medical Center, studies attention and information seeking. Dr. Gottlieb has co-authored a new paper, published this week in Proceedings of the National Academy of Sciences, that for the first time identifies cells that control attention and encode the value of information; in other words, how relevant a piece of information is when making a decision.

We spoke with Dr. Gottlieb about what her latest research has revealed, what it could mean for people with attention deficit disorder and how it might improve artificial intelligence.

What are the big questions that your research seeks to answer?

I am working to understand how the brain decides what to pay attention to, such as what it deems interesting or important. At present, there are two hypotheses. One states that the brain computes the value of information based only on whether it leads to something valuable down the line. The alternative hypothesis is that the brain calculates how much we are likely to learn, separately from any rewards we may get.

Can you give an example?

Sure. Let’s say that I’m hungry. I happen to read an article in the newspaper about apples and, as a result, I go to buy the apples. Over time, the newspaper becomes valuable because it leads me to the reward — in this case, getting apples to eat. This is the crux of the first hypothesis.

By contrast, the second hypothesis posits that the newspaper is valuable because it teaches me something new. For instance, I may see an article about a fruit that I will likely never eat, but I want to find out about it anyway. According to this hypothesis, I would read the article simply in order to learn, regardless of any rewards I may get down the line.

So by comparing these two hypotheses, I’m working to understand how the brain places value on sources of information. Answering this question has been at the heart of our research, and it is what we hoped to shed light on with this latest study.

How did you go about finding an answer?

In nearly all studies of decision making, the operating premise is that you already have all the information you need to make a decision. But in reality, even seemingly simple decisions (such as what to have for dinner) involve many steps.

For this study, our main innovation was to acknowledge that decision making isn’t a single-step process. Ultimately, of course, you have to decide which action to take. But first, you have to decide which source of information to consult to help guide your action. To reflect this added complexity, we constructed a task that traced animals’ eye movements in two steps.

In the first step, the animals decided which of two visual cues to consult. In the second step, they decided what to do based on the information they received from the cue. The two cues were colored circles on a computer screen, each containing dots that traveled either up or down. After looking at one of the circles, the animal made its final decision by choosing to look at one of two targets that were separate from the cues. If it chose correctly, it received a juice reward.

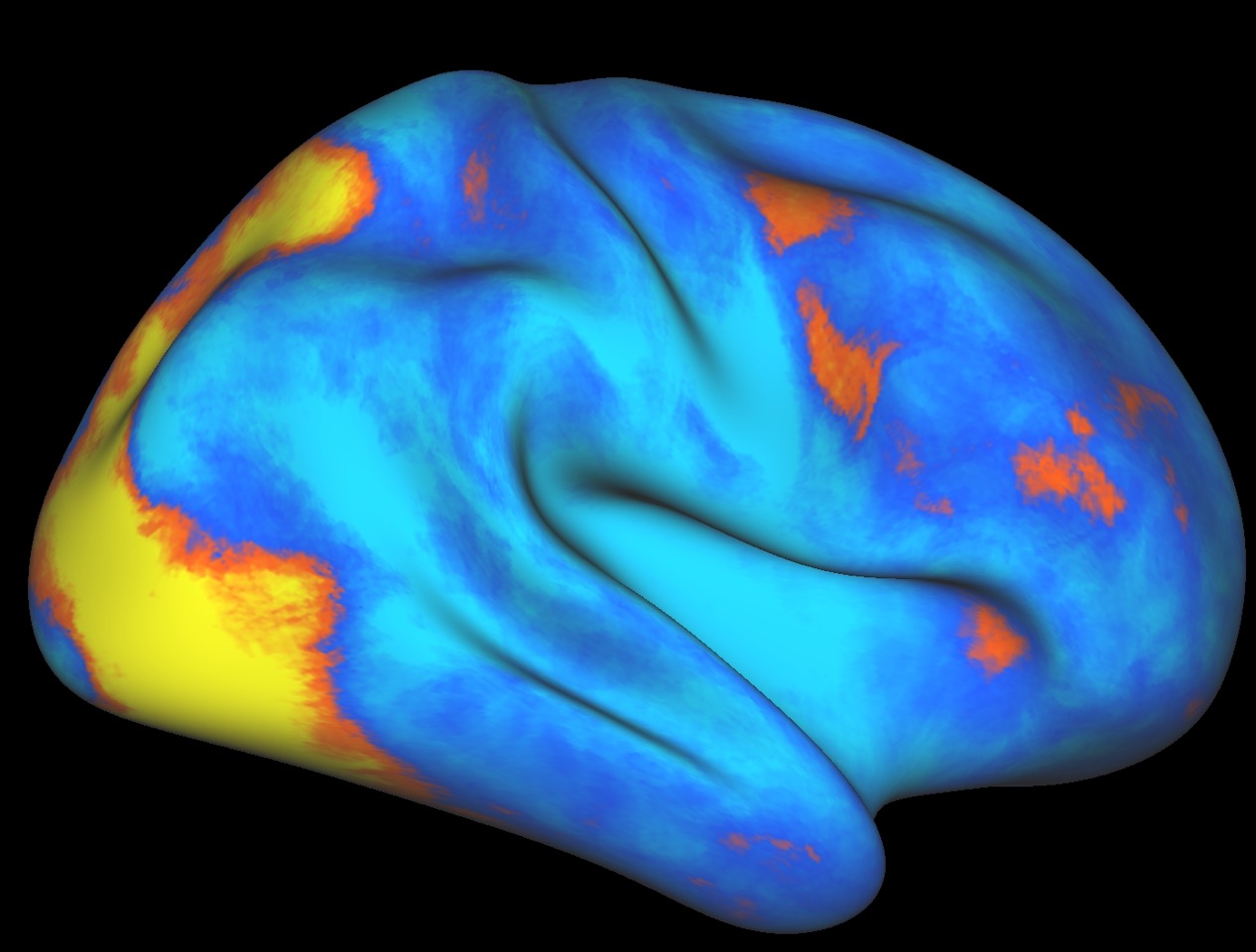

The crux of the experiment was that the moving dots inside the cues pointed toward one or the other target, and gave the animals hints about which target was likely to be correct. In addition, the color of each cue indicated how informative a cue would be; in other words, how reliably its motion would point to the correct target rather than give misleading information. As the animal decided which colored cue to look at, we recorded activity from individual cells in a part of the brain called the lateral intraparietal area (LIP), a region known to be involved in attention control.
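To make the idea of an "informative" cue concrete, here is a minimal sketch of one way to put a number on it. This is not the analysis from the paper; it is an illustration under stated assumptions: that the two targets are equally likely to be correct before a cue is consulted, that each cue color has a fixed reliability (the probability that its motion points to the correct target), and that informativeness is measured as the expected reduction in uncertainty, in bits. The function names, color names and reliability values are all hypothetical.

```python
import math


def binary_entropy(p: float) -> float:
    """Shannon entropy, in bits, of a two-outcome event with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def expected_information_gain(reliability: float) -> float:
    """Expected drop in uncertainty about the correct target after viewing a cue.

    Assumes two equally likely targets (1 bit of prior uncertainty) and a cue
    whose motion points to the correct target with probability `reliability`.
    """
    return binary_entropy(0.5) - binary_entropy(reliability)


def most_informative_cue(cues: dict[str, float]) -> str:
    """Return the cue color with the largest expected information gain."""
    return max(cues, key=lambda color: expected_information_gain(cues[color]))


# Hypothetical cue colors and reliabilities, chosen only for illustration.
cues = {"red": 0.55, "green": 0.90}
for color, reliability in cues.items():
    gain = expected_information_gain(reliability)
    print(f"{color}: reliability {reliability:.2f}, gain {gain:.3f} bits")
print("consult first:", most_informative_cue(cues))
```

Under these assumptions, a cue that is right half the time yields no information, and a cue that is always right resolves the full bit of uncertainty. An observer that values information would consult the higher-gain color first.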

What did you find?

Each LIP cell responds only to a particular spot in the visual field, and signals to the rest of the brain to attend to, or look at, that spot. We found that a cell showed greater electrical activity when one of the colored circles appeared in its field, and that the level of activity depended on how informative the cue was. A variation on the experiment found that the cells were much more sensitive to changes in the amount of information being received than to changes in the expected reward. In other words, these cells valued the information being gathered, regardless of the ultimate reward.

These findings strongly support the second hypothesis I mentioned earlier: that the brain encodes actions that bring information independently of actions that only gain rewards.

How might your findings be applied?

The first application is in medicine. Our studies of how the brain decides what to pay attention to could offer insight into psychiatric and mental disorders that involve deficits of attention, such as attention deficit disorder, depression and drug addiction. Our work reveals how the brain identifies items in our environment that are informative. Clearly, a disruption to this process can impair many actions. For instance, if you cannot identify which words or sentences in a text are important, you will have difficulty understanding the text.

Second, our findings may help improve artificial intelligence. A machine that is capable of learning on its own has to answer the same question we do: What is interesting in my world? Our study showed that, in the brain, there’s a concrete variable that’s being computed in individual cells as these cells value one piece of information over another. We can now program that variable into a machine, and see if that machine will perform better because it is gathering information more efficiently. We are collaborating with Dr. Manuel Lopes and with Dr. Pierre-Yves Oudeyer and his group at Inria, the French National Institute for computer science and applied mathematics. They are very interested in using the answers we are finding in the living brain to improve the design of an artificial one.

###

The paper is titled “Parietal neurons encode expected gains in instrumental information.” Additional coauthors include first authors Nicholas Foley, PhD, and Simon Kelly, PhD; Himanshu Mhatre, PhD; and Manuel Lopes, PhD.